```shell
# clone openpilot into your home directory
cd ~
git clone --recurse-submodules https://github.com/commaai/openpilot.git

# setup ubuntu environment
openpilot/tools/ubuntu_setup.sh

# build openpilot
cd openpilot && scons -j$(nproc)
```

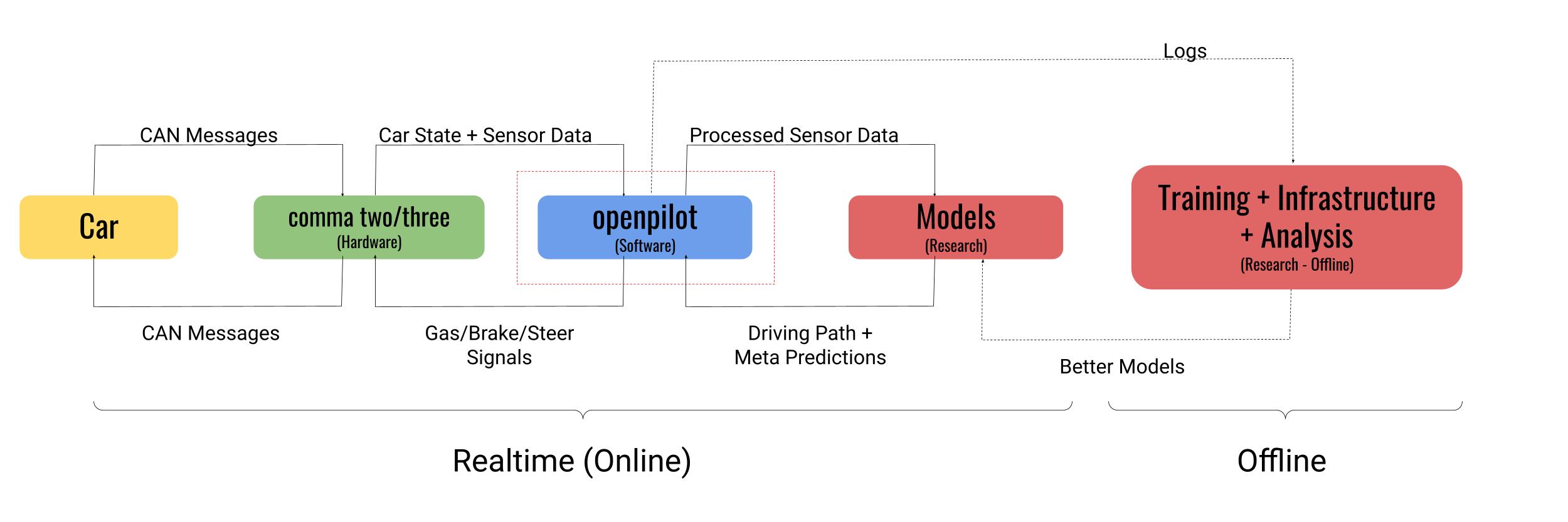

In the past, we’ve had blog posts every two years, describing the various building blocks of openpilot that work together to drive your car. With each iteration, you’ll notice that openpilot has simultaneously become much simpler (service/car abstraction, end-to-end) and much more complex (infrastructure, testing, models), while getting undeniably better. It’s been two years since that last post, so in keeping with tradition, here we go - how does openpilot work?

How Does openpilot Work?

openpilot is comma’s open source driving assistant that, well, drives your car! Essentially, openpilot reads data from various sensors (camera, IMU, steering wheel angle sensor, GNSS receiver, etc.), processes their outputs, and relays relevant inputs to a large neural network. It then converts the neural network’s outputs into actionable commands for the car’s actuators to execute. Making sure drivers pay attention while openpilot is engaged is another important function.

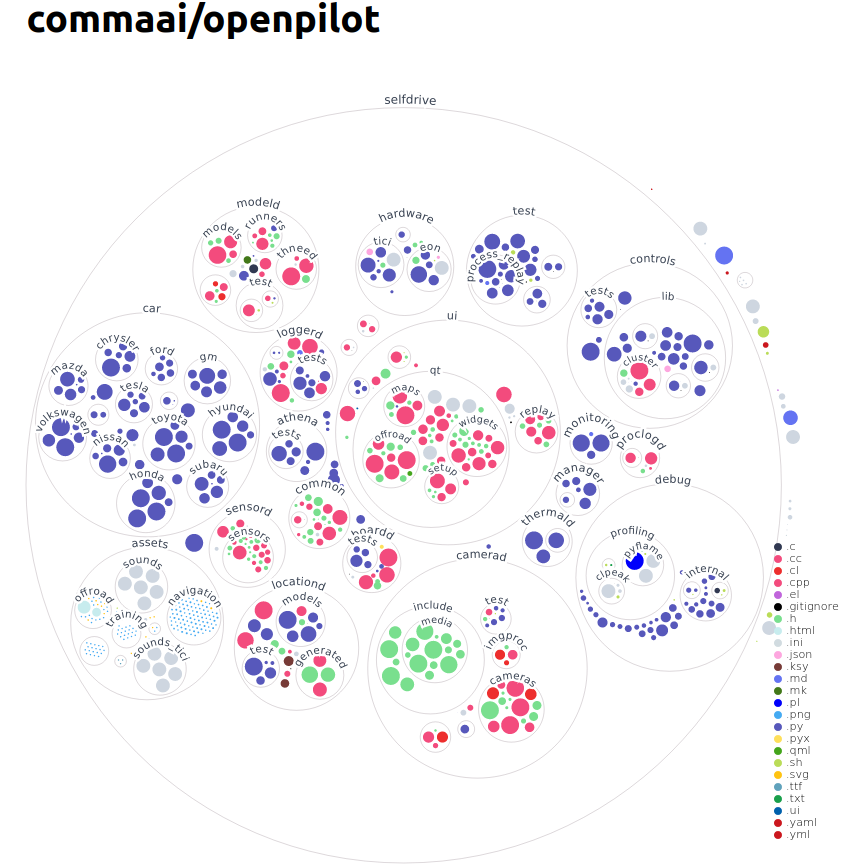

To make openpilot work, we have also developed and open-sourced a few other libraries like:

- opendbc: Library to interpret CAN bus traffic

- panda: Car interface

- cereal: Publisher/subscriber messaging specification for robotic systems

- laika: GNSS processing library

- rednose: Kalman filter library, specifically for visual odometry, SLAM, etc.

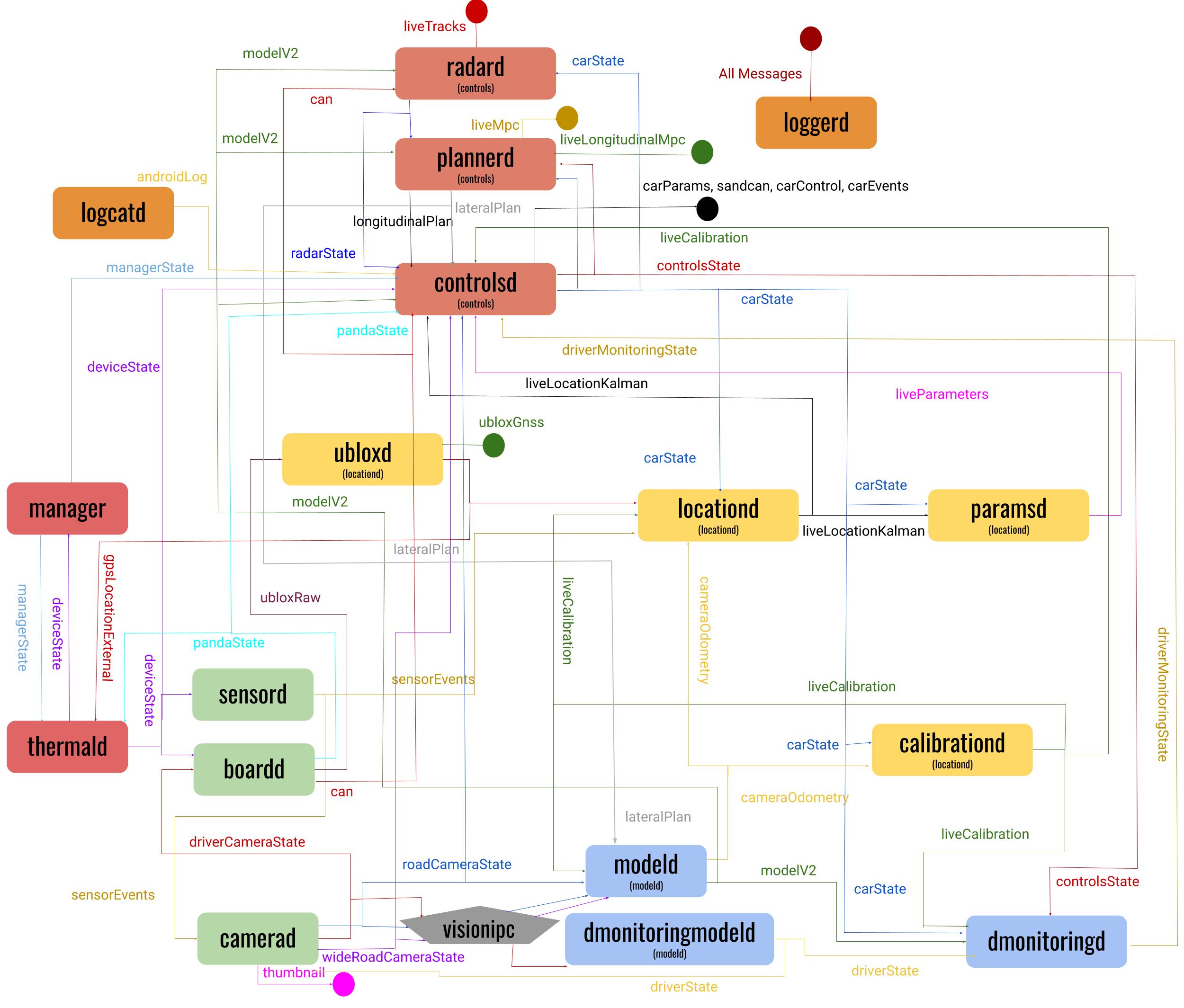

openpilot is composed of various services that communicate with each other through a single-publisher, multiple-subscriber inter-process messaging system (specified in cereal). Let’s dive into the most important services in openpilot and understand their function.
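To make the single-publisher, multiple-subscriber idea concrete, here is a minimal in-process sketch. It is not cereal's API: the `Bus` class, its methods, and the service and field names used below are all illustrative assumptions, and the real system passes messages between processes rather than through Python queues.

```python
from collections import defaultdict, deque


class Bus:
    """Toy message bus: one publisher per service, any number of subscribers."""

    def __init__(self):
        self._owners = {}                 # service name -> publisher name
        self._queues = defaultdict(list)  # service name -> subscriber queues

    def register_publisher(self, service, owner):
        # Enforce the single-publisher rule for each service.
        if service in self._owners:
            raise ValueError(f"{service} already has a publisher")
        self._owners[service] = owner

    def subscribe(self, service):
        # Each subscriber gets its own queue, so readers never steal messages.
        queue = deque()
        self._queues[service].append(queue)
        return queue

    def publish(self, service, owner, message):
        if self._owners.get(service) != owner:
            raise PermissionError(f"{owner} does not publish {service}")
        for queue in self._queues[service]:
            queue.append(message)


# Hypothetical usage: one service publishes, two others listen.
bus = Bus()
bus.register_publisher("carState", owner="controlsd")
planner_inbox = bus.subscribe("carState")
ui_inbox = bus.subscribe("carState")
bus.publish("carState", "controlsd", {"vEgo": 12.5})
```

Because every message type has exactly one writer, a subscriber always knows where a message came from, and adding a new reader never affects the existing ones.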

A Tour Through openpilot

The services can broadly be divided into:

- Sensors and Actuators

- Neural Network Runners

- Localization and Calibration

- Controls

- System, Logging, and Miscellaneous services

Sensors and Actuators

- boardd

This service manages the interface between the main device and the panda, which communicates with the peripherals. These peripherals include the GNSS module, infrared LEDs (for nighttime driver monitoring), and the car.

- camerad

camerad manages both the road and driver cameras (plus an additional wide-road camera on the comma three), and handles autofocus and autoexposure. camerad writes the image data directly to visionipc, which passes images between processes with low overhead.

- sensord

sensord configures and reads the rest of the sensors (gyro, accelerometer, magnetometer, and light sensors).

Neural Network Runners

- modeld

This service feeds the image stream from visionipc into the main driving neural network. Using this data along with the desire inputs (changing lanes, etc.), the neural network tries to predict where and how to drive under the given conditions. modeld runs the supercombo model, which outputs the desired driving path along with other metadata including lane lines, lead cars, road edges, and more.

- dmonitoringmodeld

Driver monitoring is one of the most salient features of openpilot. We make sure that the drivers are paying adequate attention to the road and are capable of taking over in case openpilot needs them to. This service runs the dmonitoring_model using the driver-facing camera’s image stream and predicts the driver’s head pose, whether their eyes are open or closed, and whether they are wearing sunglasses.

- dmonitoringd

This service contains the logic for assessing whether the driver can take over if necessary. If not, we alert the driver. This decision is based on the outputs of the dmonitoring model, information about the scene from the driving model, and a few more parameters. Drivers are blocked from engaging openpilot if they have been distracted for extended periods of time.
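The escalation from "distracted" to "alert" can be pictured as a timer that resets whenever the driver is attentive. The sketch below is only an illustration of that idea: the `DistractionTimer` class, its thresholds, and its alert names are assumptions, and the actual dmonitoringd logic uses more inputs than a single attentive/distracted flag.

```python
class DistractionTimer:
    """Tracks continuous distraction time and maps it to an alert level."""

    def __init__(self, warn_after_s=2.0, critical_after_s=6.0):
        # Thresholds are made-up illustration values, not openpilot's.
        self.warn_after_s = warn_after_s
        self.critical_after_s = critical_after_s
        self.distracted_s = 0.0

    def update(self, attentive, dt):
        # Reset as soon as the driver looks back at the road.
        self.distracted_s = 0.0 if attentive else self.distracted_s + dt
        if self.distracted_s >= self.critical_after_s:
            return "critical"
        if self.distracted_s >= self.warn_after_s:
            return "warning"
        return "none"


# Hypothetical usage: the model reports a distracted driver for two seconds.
timer = DistractionTimer()
levels = [timer.update(attentive=False, dt=0.5) for _ in range(4)]
```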

Localization and Calibration

- ubloxd

This service parses the GNSS data, which is then used for localization.

- locationd

This service is responsible for localizing the car in the world. Localization is an essential step in accurately describing the state of your car and its interaction with the world. This is how openpilot knows your speed, if you’re in a banked turn, or if you’re going uphill. We combine noisy data from an array of sensors in a Kalman Filter (live_kf), and publish the filtered values. This localizer outputs the position, orientation, velocity, angular velocity and acceleration of the car.
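As a rough illustration of how a Kalman filter smooths noisy readings, here is a one-dimensional sketch that tracks a single value such as speed. It is far simpler than live_kf, which estimates many states at once; the class name, noise variances, and measurements are assumptions chosen for the example.

```python
class ScalarKalmanFilter:
    """One-dimensional Kalman filter for a slowly changing quantity."""

    def __init__(self, x0, p0, process_var, meas_var):
        self.x = x0           # state estimate
        self.p = p0           # variance of the estimate
        self.q = process_var  # how much the true value may drift per step
        self.r = meas_var     # how noisy each measurement is

    def predict(self):
        # Constant-state model: the estimate stays put, uncertainty grows.
        self.p += self.q

    def update(self, z):
        # The Kalman gain weighs the measurement against the current estimate.
        k = self.p / (self.p + self.r)
        self.x += k * (z - self.x)
        self.p *= 1.0 - k
        return self.x


# Hypothetical noisy speed readings in m/s.
kf = ScalarKalmanFilter(x0=0.0, p0=100.0, process_var=0.01, meas_var=1.0)
for z in [19.6, 20.3, 20.1, 19.8, 20.2]:
    kf.predict()
    kf.update(z)
```

With a large initial variance the filter trusts the first measurements heavily, then relies more on its own estimate as the variance shrinks.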

- calibrationd

The input to the neural network driving model is warped into the calibrated frame, which is aligned with the pitch and yaw of the vehicle. This normalizes the image stream to account for the various ways in which people mount their devices on their windshields.

- paramsd

There are a host of parameters that are specific to car models (mass, steering ratio, tire stiffness, steering offset, wheel-to-center-of-mass distance, etc.) that are necessary to convert a driving path into signals the car understands, like a steering angle. We hard-code many of these values as car-model-specific parameters and treat them as constants. But some of these parameters are inaccurate, or change over the life of a car, or even change during a drive depending on road conditions. So we need to estimate these parameters accurately to have better control over the way the car responds to inputs. We use a Kalman Filter (car_kf) to achieve this, assuming a single-track vehicle model.
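To see why a parameter like steering ratio matters, consider the simplest form of the single-track model, the kinematic version that ignores tire slip. This is a deliberate simplification for illustration, not the model car_kf uses; the function names and the example numbers are assumptions.

```python
import math


def curvature_from_steering(steering_wheel_deg, steer_ratio, wheelbase_m):
    """Path curvature (1/m) from the steering wheel angle, ignoring tire slip."""
    road_wheel_rad = math.radians(steering_wheel_deg) / steer_ratio
    return math.tan(road_wheel_rad) / wheelbase_m


def steering_from_curvature(curvature, steer_ratio, wheelbase_m):
    """Inverse mapping: steering wheel angle (degrees) for a desired curvature."""
    road_wheel_rad = math.atan(curvature * wheelbase_m)
    return math.degrees(road_wheel_rad) * steer_ratio


# Hypothetical car: 2.7 m wheelbase, nominal steering ratio of 15.
desired_curvature = 0.01  # a 100 m radius turn
nominal_deg = steering_from_curvature(desired_curvature, 15.0, 2.7)
# If the true ratio is 16, the nominal command produces a wider turn.
actual_curvature = curvature_from_steering(nominal_deg, 16.0, 2.7)
```

Even in this simplified model, a modest error in the assumed steering ratio makes the car follow a different curve than the one planned, which is the motivation for estimating such parameters online.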

Controls

- radard

This service interprets the raw data from the various radars present in different models of cars and converts them to a canonical format.

- plannerd

Planning is separated into two parts - lateral planning (steering) and longitudinal planning (gas/brake). Both use an MPC (Model Predictive Control) solver to ensure the plans are smooth and optimize some reasonable costs.

The driving neural network predicts where the car ought to be, but the lateral planner figures out how to get there. Using the neural network’s path prediction (and sometimes lane-line prediction), and an MPC solver, the lateral planner estimates how much the car should be turning (curvature) over the next few seconds.

Longitudinal planning still relies mostly on lead cars. It takes the fused (neural network + radar) estimates of lead cars and the desired set speed, feeds them into an MPC solver, and computes a good acceleration profile for the next few seconds.
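A full MPC solver is beyond a short example, so the sketch below swaps in a much simpler stand-in: a proportional gap-and-speed rule that returns one desired acceleration instead of an optimized profile. It only illustrates how a set speed and a lead-car estimate combine into a single request. Every gain, limit, and name here is an assumption.

```python
def follow_accel(v_ego, v_set, lead_gap_m=None, v_lead=None,
                 time_gap_s=1.8, min_gap_m=4.0,
                 k_gap=0.2, k_speed=0.5, a_min=-3.5, a_max=1.5):
    """Desired acceleration (m/s^2) from the set speed and an optional lead car."""
    # Cruise term: close the difference to the set speed.
    accel = k_speed * (v_set - v_ego)
    if lead_gap_m is not None and v_lead is not None:
        # Following term: keep a speed-dependent gap and match the lead's speed.
        desired_gap = min_gap_m + time_gap_s * v_ego
        lead_accel = k_gap * (lead_gap_m - desired_gap) + k_speed * (v_lead - v_ego)
        # Take the more conservative of cruising and following.
        accel = min(accel, lead_accel)
    # Respect comfort and safety limits.
    return max(a_min, min(a_max, accel))


# Hypothetical scene: closing fast on a slower lead car 20 m ahead.
request = follow_accel(v_ego=25.0, v_set=30.0, lead_gap_m=20.0, v_lead=15.0)
```

An MPC solver improves on a rule like this by optimizing over a whole horizon, which lets it trade off smoothness against gap and speed errors instead of reacting to the current instant only.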

- controlsd

This is the service that actually controls your car. It receives the plan from plannerd, in the form of curvatures and velocities/accelerations, and converts it to control signals. These vehicle-agnostic targets (acceleration and steering angle) are then converted to vehicle-specific CAN commands that work for that car’s API, through a closed-loop control system running at 100 Hz. controlsd also parses the raw CAN data from the car and publishes it in a canonical format.
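A closed loop running at 100 Hz can be sketched with a basic PI controller tracking a target acceleration. This is a generic textbook controller, not controlsd's implementation; the gains, output limits, and the first-order "car" used to exercise it are assumptions.

```python
class PIController:
    """Proportional-integral controller with a clamped output."""

    def __init__(self, kp, ki, dt, out_min, out_max):
        self.kp, self.ki, self.dt = kp, ki, dt
        self.out_min, self.out_max = out_min, out_max
        self.integral = 0.0

    def update(self, target, measured):
        error = target - measured
        integral = self.integral + self.ki * error * self.dt
        output = self.kp * error + integral
        clipped = max(self.out_min, min(self.out_max, output))
        if clipped == output:
            # Anti-windup: only accumulate while the output is not saturated.
            self.integral = integral
        return clipped


def simulate(target, seconds, dt=0.01, tau=0.5):
    """Run the loop at 1/dt Hz against a toy first-order plant."""
    controller = PIController(kp=0.5, ki=2.0, dt=dt, out_min=-3.0, out_max=3.0)
    accel = 0.0
    for _ in range(int(round(seconds / dt))):
        command = controller.update(target, accel)
        # Toy plant: measured acceleration lags the command with time constant tau.
        accel += (command - accel) * dt / tau
    return accel


final_accel = simulate(target=1.0, seconds=10.0)
```

The integral term removes the steady-state error that a purely proportional controller would leave, and the clamp keeps the command within what the actuator can deliver.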

System, Logging, and Miscellaneous services

- manager

This manages the starting and stopping of all the processes described above.

- thermald

This service monitors the state of the device running openpilot, including CPU and memory usage, power supply status, system temperatures (hence the name), and more. thermald also monitors the conditions under which openpilot should go onroad, our term for the state where all the driving processes are running.

- loggerd / logcatd / proclogd

These services handle all of openpilot’s logging. Compressed video and sensor data are logged as training data, to keep improving the neural networks. All cereal messages, system crash reports, etc. are also logged so we can understand and fix failures.

- athenad

athenad sets up a websocket connection to the comma.ai servers and handles all device-related requests from connect.comma.ai. The device can be reached by sending REST API calls to athena.comma.ai; see the docs for more information. Examples of possible API calls include requesting the battery voltage, setting a navigation destination, requesting the car’s location, and requesting file uploads.

- ui

This service handles everything shown to the user. While the car is off, it contains a training guide to onboard new users, shows system status, and exposes some settings. While the car is on, the road-facing camera stream is shown with overlaid visualizations of the driving path, lane lines, and lead cars.

There are a few other services like clocksd, timezoned, updated, etc. that support the smooth functioning of openpilot.

Hardware

To run openpilot, you need some compute and a panda to interface with the vehicle. openpilot now supports three hardware platforms: comma two, comma three (both of which have an integrated panda), and a Linux PC (requires a separate panda). The comma two runs NEOS, our stripped-down Android fork, while the comma three runs AGNOS, our new Ubuntu-based operating system. We also launched the red panda, the future of the panda platform. It supports CAN-FD and has a 4x faster CPU.

openpilot has a nice hardware abstraction layer to enable high-quality ports to other hardware.

We hope this was a good starting point for you to go tinker around. For more detailed information, be sure to watch our COMMA_CON talks. What else would you like to know about how openpilot works? Tweet at us!